Disinformation is false information deliberately spread to deceive people. It is an orchestrated adversarial activity in which actors employ strategic deceptions and media manipulation tactics to advance political, military, or commercial goals. Disinformation is carried out through attacks that weaponize multiple rhetorical strategies and forms of knowing (not only falsehoods but also truths, half-truths, and value judgements) to exploit and amplify culture wars and other identity-driven controversies.

- The ‘About’ section of this post gives an overview of the issues or challenges, potential solutions, and web links. Other sections cover relevant legislation, committees, agencies, and programs, as well as the judiciary, nonpartisan and partisan organizations, and a Wikipedia entry.

- To participate in ongoing forums, ask the post’s curators questions, or make suggestions, scroll to the ‘Discuss’ section at the bottom of each post or select the ‘comment’ icon.

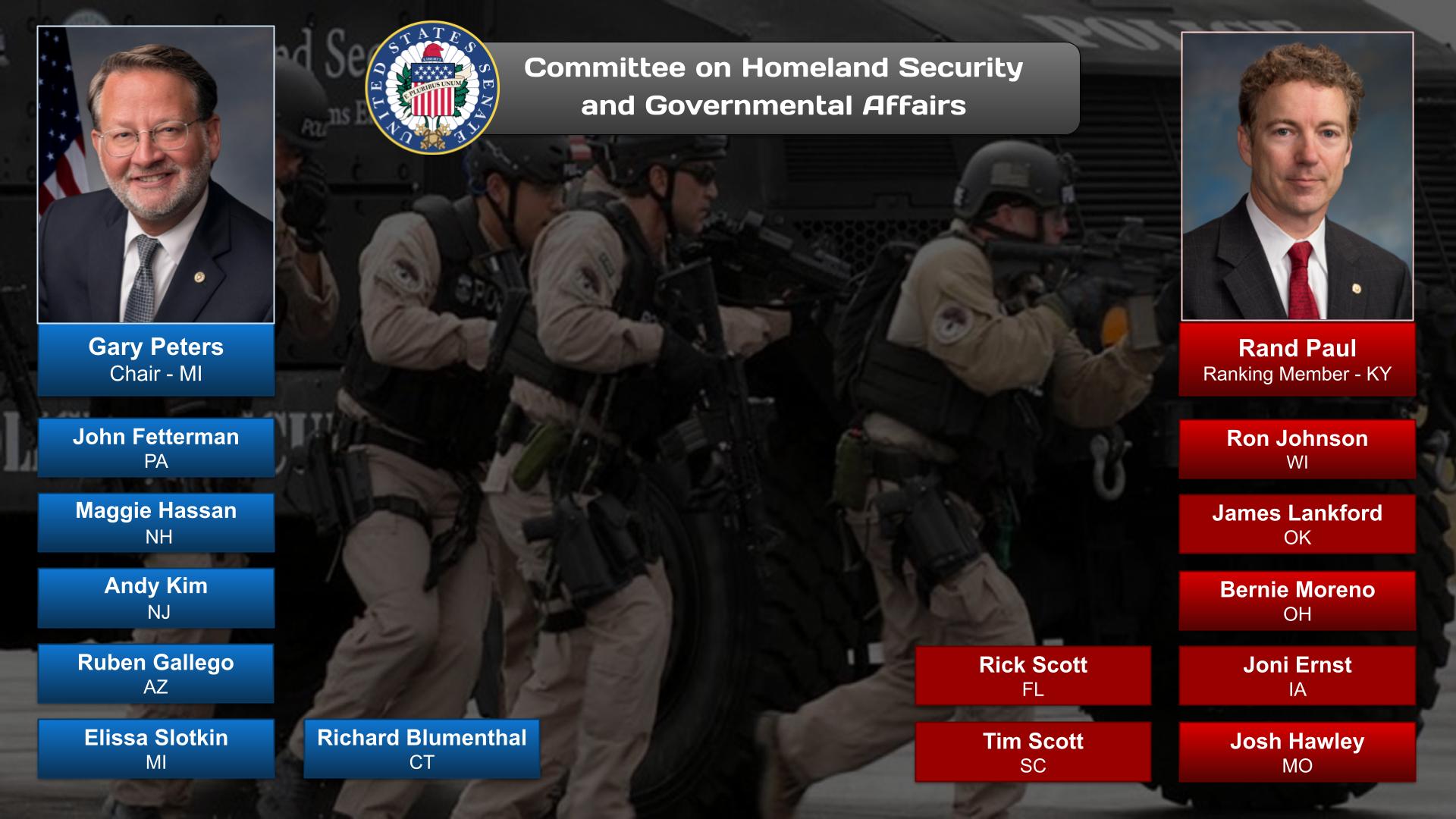

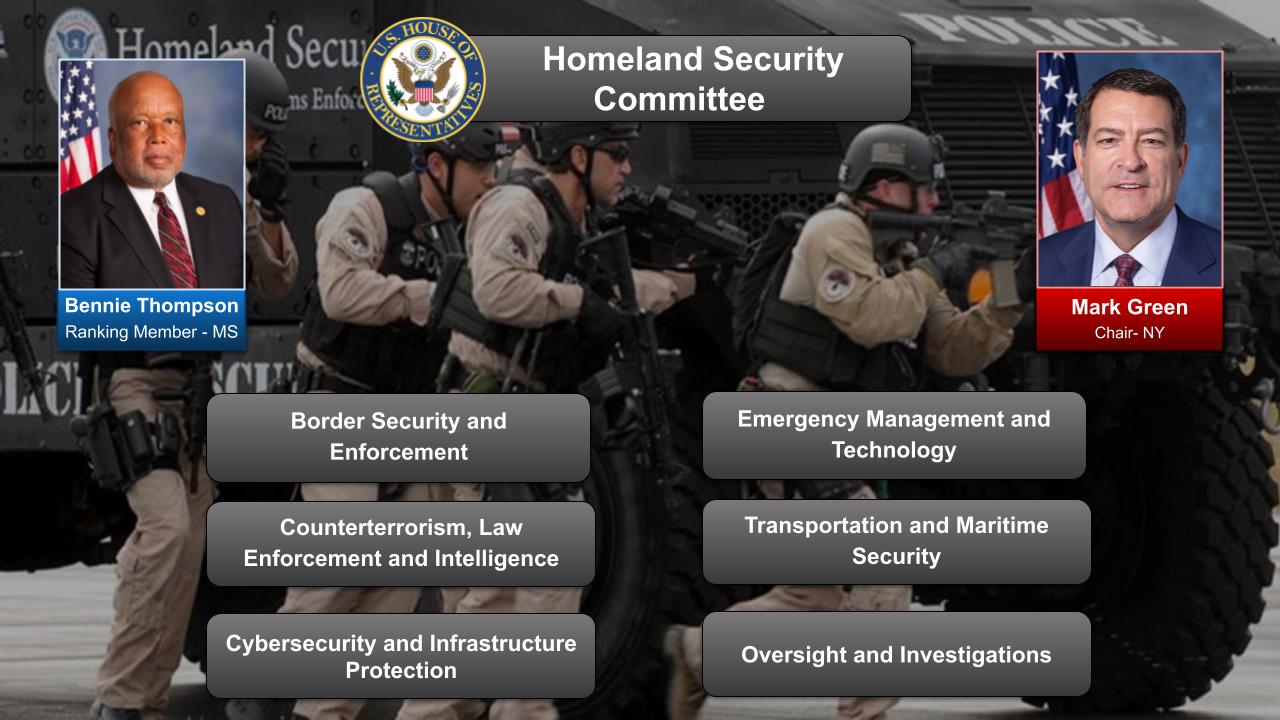

The Disinformation category has related posts on government agencies and departments, and on committees and their Chairs.

(10:28)

https://www.youtube.com/watch?v=3vpfKZ-7ir4

Half of U.S. adults say they sometimes get their news from social media. However, almost two-thirds of adults say they view social media as a bad thing for democracy. This raises the question of what responsibility social media companies bear for our increasingly divided political climate. Judy Woodruff explores that question further in her ongoing series, America at a Crossroads.

OnAir Post: Disinformation